Introduction

After having experimented with the Django Rest Framework, I decided it was time to experiment with the FastAPI framework.

FastAPI was designed to be a lean framework; unlike, for example, Django Rest Framework, it is not a batteries-included framework.

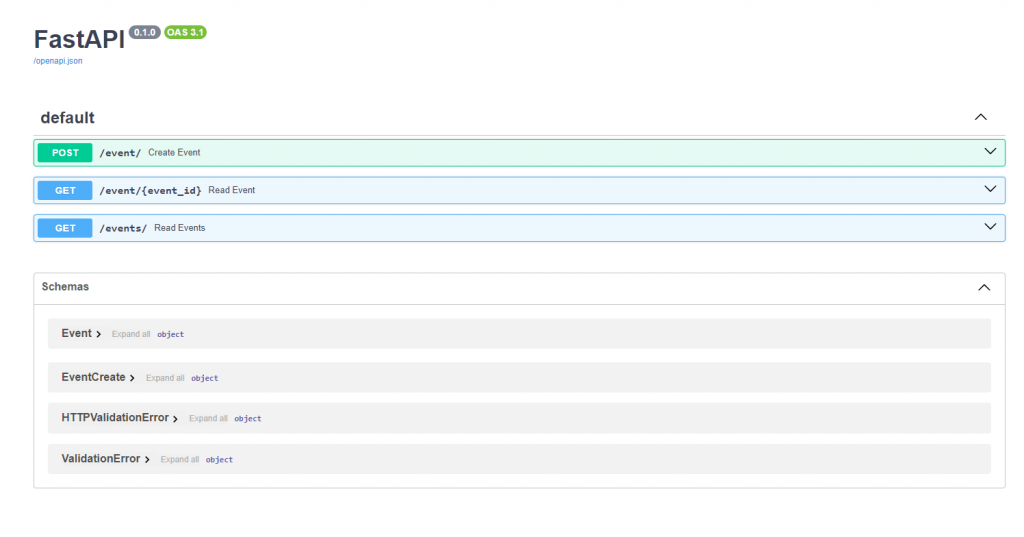

However, this may be to its advantage, as you are free to choose, for example, whichever ORM you like. The one thing you get for free, apart from the API framework itself, is an OpenAPI implementation.

Prerequisites

To follow along, you need the following:

- Python installed. Some knowledge of programming in Python is also very helpful.

- Either a local or a remote instance of a Postgres database. Make sure you have permission to create databases and tables.

- An IDE. For this project I am using Visual Studio Code, but PyCharm is another great option.

- PgAdmin, which is a handy tool to have around if we go further.

- Postman, which is quite handy for testing.

Setting up the virtual environment

When working with Python it is best practice to set up a virtual environment. To do this, in the directory where you want to put your project, type:

    python -m venv env

Next, execute the appropriate activate script for your OS: it is in the env/Scripts directory on Windows and in env/bin on Linux and macOS.
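If you are not sure whether the environment is really active, a small check like the one below can help. It is only a sketch; the function name in_virtualenv is made up for this example and relies on the fact that sys.prefix and sys.base_prefix differ inside a venv.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter runs inside a virtual environment."""
    # Inside a venv, sys.prefix points at the environment directory,
    # while sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
    print("interpreter prefix:", sys.prefix)
```

Run it with the same python command you plan to use for the project; if it reports False, the activate script has not been run in that terminal.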

And if you do not feel like typing along, you can find the completed code here.

Preliminaries

Open your IDE in an empty directory and add a ‘requirements.txt’:

    alembic==1.12.0
    annotated-types==0.5.0
    anyio==3.7.1
    asyncpg==0.28.0
    click==8.1.7
    colorama==0.4.6
    fastapi==0.103.2
    greenlet==3.0.0
    gunicorn==21.2.0
    h11==0.14.0
    idna==3.4
    Mako==1.2.4
    MarkupSafe==2.1.3
    packaging==23.2
    psycopg2==2.9.9
    pydantic==2.4.2
    pydantic_core==2.10.1
    sniffio==1.3.0
    SQLAlchemy==2.0.21
    starlette==0.27.0
    typing_extensions==4.8.0
    uvicorn==0.23.2

Now, in your terminal or command line (also opened in the same directory), type:

    pip install -r requirements.txt

What will be built

We will build a simple Web API to create and query events, like festivals. I kept the database to an absolute minimum and you may extend this at will. Also, because I plan to deploy this API to Kubernetes in a later blog, automatic migrations using alembic are also built in. For now we will just run it locally.

The code

In your directory create a ‘utils.py’ file:

    from os import getenv


    def fetch_database_uri() -> str:
        # Each setting is read from the environment, with a local default.
        db_name = getenv('POSTGRES_DB', 'fasteventsapi')
        db_user = getenv('POSTGRES_USER', 'postgres')
        db_password = getenv('POSTGRES_PASSWORD', '<your db password>')
        db_host = getenv('POSTGRES_HOST', 'localhost')
        db_port = getenv('POSTGRES_PORT', '5432')

        return f'postgresql://{db_user}:{db_password}@{db_host}:{db_port}/{db_name}'

This simply reads the values from the environment; where a variable is not set, the default value is used.
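One thing to keep in mind with a URI that is put together like this: a password containing characters such as @ or / has to be URL-escaped, otherwise the URI is parsed incorrectly. The following is a minimal sketch of how that could look, not part of the original project. The name build_database_uri, the empty default password and the example values are assumptions made for illustration.

```python
import os
from os import getenv
from urllib.parse import quote_plus


def build_database_uri() -> str:
    """Hypothetical variant of fetch_database_uri() that escapes the credentials."""
    db_name = getenv("POSTGRES_DB", "fasteventsapi")
    db_user = getenv("POSTGRES_USER", "postgres")
    db_password = getenv("POSTGRES_PASSWORD", "")  # assumption: empty default
    db_host = getenv("POSTGRES_HOST", "localhost")
    db_port = getenv("POSTGRES_PORT", "5432")

    # quote_plus() turns characters such as '@' and '/' into percent-escapes,
    # so they cannot be mistaken for URI delimiters.
    user = quote_plus(db_user)
    password = quote_plus(db_password)
    return f"postgresql://{user}:{password}@{db_host}:{db_port}/{db_name}"


if __name__ == "__main__":
    os.environ["POSTGRES_PASSWORD"] = "p@ss/word"  # made-up example value
    print(build_database_uri())
```

Setting the variables in the environment before starting the application is all it takes to point the same code at another database.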

Now we come to the models: in your directory create a ‘models.py’ file:

    from sqlalchemy import Column, Integer, String
    # In SQLAlchemy 2.0, declarative_base lives in sqlalchemy.orm.
    from sqlalchemy.orm import declarative_base

    Base = declarative_base()


    class Event(Base):
        __tablename__ = 'events'

        id = Column(Integer, primary_key=True)
        name = Column(String)
        description = Column(String)
        location = Column(String)

Some short notes:

- Here we define the model. __tablename__ is the name of the database table

- Next we define the four columns: id is the primary key, the rest are text fields

- These are the models as they are stored in the database

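Next we create the pydantic models, which describe the data going into and coming out of the API, in the ‘schemas.py’ file:

    from typing import Optional

    from pydantic import BaseModel, ConfigDict


    class EventBase(BaseModel):
        name: str
        # Optional fields: None is accepted and is also the default.
        description: Optional[str] = None
        location: Optional[str] = None


    class EventCreate(EventBase):
        pass


    class Event(EventBase):
        # Allow building this schema from the attributes of an ORM object.
        model_config = ConfigDict(from_attributes=True)

        id: int

A short explanation:

- The EventBase class is the base class for all our event classes. The description and location fields are optional and default to None.

- The EventCreate class is basically the same as the EventBase class. We use this for creating events. As you can see it lacks an id, which is not needed to create an Event.

- The Event class, however, has an id and a model_config with from_attributes=True, which lets pydantic read the values from the attributes of an ORM object instead of from a dict.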
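To see what from_attributes does in practice, here is a small sketch written against pydantic v2 (the version pinned in the requirements). EventOut and FakeRow are hypothetical names used only for this example, and the event data is made up. FakeRow stands in for a database row, since any object with the right attributes will do.

```python
from typing import Optional

from pydantic import BaseModel, ConfigDict, ValidationError


class EventOut(BaseModel):
    # Hypothetical stand-in for the Event schema of this article.
    model_config = ConfigDict(from_attributes=True)

    id: int
    name: str
    description: Optional[str] = None
    location: Optional[str] = None


class FakeRow:
    """Stand-in for a database row: a plain object with attributes."""

    def __init__(self, id, name, description=None, location=None):
        self.id = id
        self.name = name
        self.description = description
        self.location = location


if __name__ == "__main__":
    # The values are read from the attributes of the object.
    event = EventOut.model_validate(FakeRow(1, "Summer Festival", location="Utrecht"))
    print(event.model_dump())

    try:
        EventOut.model_validate(FakeRow("not a number", "Broken"))
    except ValidationError as exc:
        print("rejected:", exc.error_count(), "error(s)")
```

This is roughly what happens when a route with a response_model returns an ORM object: the object is validated against the schema before it is serialised.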

Now continue to add a ‘database.py’ where we will define two important variables:

    from sqlalchemy import create_engine
    from sqlalchemy.orm import sessionmaker

    from utils import fetch_database_uri

    # The engine manages the connections to the database.
    engine = create_engine(fetch_database_uri())
    # SessionLocal is a factory: calling it returns a new Session.
    SessionLocal = sessionmaker(autocommit=False, autoflush=False, bind=engine)

According to the documentation, a Session manages persistence operations for ORM-mapped objects. We will need this later on.

To make life a bit easier for us, we will put the CRUD operations, or rather the create and retrieve operations, in a new file called ‘crud.py’:

    from sqlalchemy.orm import Session

    import models
    import schemas


    def get_event(db: Session, event_id: int):
        # Returns None when no event with this id exists.
        return db.query(models.Event).filter(models.Event.id == event_id).first()


    def get_events(db: Session, skip: int = 0, limit: int = 100):
        return db.query(models.Event).offset(skip).limit(limit).all()


    def create_event(db: Session, event: schemas.EventCreate):
        db_event = models.Event(name=event.name, description=event.description, location=event.location)
        db.add(db_event)
        db.commit()
        # Reload the row so that the generated id is available.
        db.refresh(db_event)
        return db_event

The functions seem quite self-explanatory, but here are some notes:

- Note how the classes in models are used for database queries, while the classes in schemas are used for communicating with the API itself: create_event() receives a schemas.EventCreate object and eventually returns a models.Event object

- Error checking is not done in this code. This should of course be built in if this code were to go to production
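If you want to try these query patterns without a running Postgres server, the sketch below may help. It is self-contained and rests on a few assumptions: it uses an in-memory SQLite database instead of Postgres, redefines a minimal Event model inline, and adds an order_by() (not present in crud.py) so that the paging result is predictable. The helper names make_session and demo are made up for this example.

```python
from sqlalchemy import Column, Integer, String, create_engine
from sqlalchemy.orm import declarative_base, sessionmaker

Base = declarative_base()


class Event(Base):
    __tablename__ = "events"

    id = Column(Integer, primary_key=True)
    name = Column(String)
    description = Column(String)
    location = Column(String)


def make_session():
    """Create an in-memory SQLite database and return a session bound to it."""
    engine = create_engine("sqlite://")  # assumption: SQLite instead of Postgres
    Base.metadata.create_all(bind=engine)
    return sessionmaker(autocommit=False, autoflush=False, bind=engine)()


def demo():
    db = make_session()
    try:
        for name in ("Festival A", "Festival B", "Festival C"):
            db.add(Event(name=name, location="somewhere"))
        db.commit()

        # Same shape as get_event(): filter on the primary key.
        first = db.query(Event).filter(Event.id == 1).first()
        # Same shape as get_events(), with explicit ordering added:
        # skip one row, then return at most two.
        page = db.query(Event).order_by(Event.id).offset(1).limit(2).all()
        return first.name, [event.name for event in page]
    finally:
        db.close()


if __name__ == "__main__":
    print(demo())
```

Without an ORDER BY the database is free to return rows in any order, so for real paging it is worth considering an explicit ordering in get_events() as well.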

Adding migrations

For the migrations we will use alembic.

In your terminal type:

    alembic init alembic

This generates an ‘alembic’ directory, and in it you will find an ‘env.py’, which we need to change:

    from logging.config import fileConfig

    from sqlalchemy import create_engine, pool
    from sqlalchemy.orm import declarative_base

    from alembic import context

    from utils import fetch_database_uri

    # Note: this is a fresh, empty Base, not the one from models.py, so
    # autogenerate will not pick up the Event model.
    Base = declarative_base()
    TargetMetaData = Base.metadata

    config = context.config

    if config.config_file_name is not None:
        fileConfig(config.config_file_name)


    def run_migrations_offline() -> None:
        # Offline mode: emit SQL from the URL alone, without a live connection.
        context.configure(
            url=fetch_database_uri(),
            literal_binds=True,
            target_metadata=TargetMetaData,
        )

        with context.begin_transaction():
            context.run_migrations()


    def run_migrations_online() -> None:
        # Online mode: connect to the database and run the migrations directly.
        engine = create_engine(fetch_database_uri(), poolclass=pool.NullPool)

        with engine.connect() as connection:
            context.configure(
                connection=connection,
                target_metadata=TargetMetaData,
            )

            with context.begin_transaction():
                context.run_migrations()


    if context.is_offline_mode():
        run_migrations_offline()
    else:
        run_migrations_online()

This code:

- Creates a Base and takes its MetaData as the target of the migrations

- Then runs the migrations either online or offline

Now type:

    alembic revision --autogenerate -m "Initial migration"

And next apply the migration to the database:

    alembic upgrade head

This creates an ‘alembic_version’ table in your database. You can check it out with PgAdmin.

Now it is time to add the events table to your database.

In the terminal type:

    alembic revision -m "add new table"

In your ‘alembic/versions’ directory you will find a new file whose name ends in ‘add_new_table.py’. Open that file and change the upgrade() function to look like this:

    def upgrade() -> None:
        op.create_table(
            'events',
            sa.Column('id', sa.Integer, primary_key=True, autoincrement=True),
            sa.Column('name', sa.String(100), nullable=False),
            sa.Column('description', sa.String(1000), nullable=True),
            sa.Column('location', sa.String(100), nullable=True)
        )

Next we need to be able to roll back the migration:

    def downgrade() -> None:
        op.drop_table('events')

Next, type in your terminal:

    alembic upgrade head

The API

Add an ‘app.py’ file to your directory and these preliminaries:

    from fastapi import Depends, FastAPI, HTTPException
    from sqlalchemy.orm import Session
    from alembic.config import Config
    from alembic import command

    import models
    import crud
    import schemas
    import database

    # Create any tables defined on the models that do not exist yet.
    models.Base.metadata.create_all(bind=database.engine)


    def get_db():
        # Open the session before the try block, so that db is always
        # defined by the time the finally clause runs.
        db = database.SessionLocal()
        try:
            yield db
        finally:
            db.close()

A lot is happening here already:

- We import all the necessary modules and classes

- Then we call create_all(), which creates the tables defined on our models if they do not exist yet

- We define a function that opens a database session, hands it to the caller and closes it again afterwards
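The yield inside try/finally is what guarantees that the session gets closed, even when the code using it fails. The sketch below shows the pattern in isolation, using only the standard library. FakeSession and run_handler are hypothetical names for this example, and driving the generator through contextlib.contextmanager is only comparable to, not identical with, what the framework does.

```python
from contextlib import contextmanager


class FakeSession:
    """Hypothetical stand-in for a database session; it records close()."""

    def __init__(self):
        self.closed = False

    def close(self):
        self.closed = True


def get_db():
    db = FakeSession()
    try:
        yield db  # the caller works with db while the generator is paused here
    finally:
        db.close()  # runs on normal completion and when the caller raises


def run_handler(fail: bool) -> FakeSession:
    """Use get_db() as a context manager and return the session it handed out."""
    holder = []
    try:
        with contextmanager(get_db)() as db:
            holder.append(db)
            if fail:
                raise RuntimeError("simulated handler error")
    except RuntimeError:
        pass  # swallowed here only so the demo can inspect the session
    return holder[0]


if __name__ == "__main__":
    print("closed after success:", run_handler(fail=False).closed)
    print("closed after failure:", run_handler(fail=True).closed)
```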

Next we create the app:

    app = FastAPI()


    @app.post("/event/", response_model=schemas.Event)
    def create_event(event: schemas.EventCreate, db: Session = Depends(get_db)):
        return crud.create_event(db=db, event=event)


    @app.get("/event/{event_id}", response_model=schemas.Event)
    def read_event(event_id: int, db: Session = Depends(get_db)):
        db_event = crud.get_event(db=db, event_id=event_id)
        if db_event is None:
            raise HTTPException(status_code=404, detail="Event not found")
        return db_event


    @app.get("/events/", response_model=list[schemas.Event])
    def read_events(skip: int = 0, limit: int = 100, db: Session = Depends(get_db)):
        events = crud.get_events(db, skip=skip, limit=limit)
        return events


    @app.on_event("startup")
    def startup_event():
        try:
            alembic_cfg = Config("alembic.ini")
            command.downgrade(alembic_cfg, "base")
            command.upgrade(alembic_cfg, "head")
        except Exception:
            # Stopgap: migration errors are silently ignored here.
            pass

Again, some short notes:

- We create the API in the app variable, to which we assign several routes

- The downgrade is there to make sure the ‘alembic_version’ table, which keeps track of our migrations, gets created. Be aware that downgrading to base also runs downgrade(), so the events table is dropped along with its data whenever the API starts with the migrations applied.

- We perform the migrations in a try-except block. Sometimes alembic tries to create a table twice, and I still have to find a way around that. For now this seems the best way, but this should by no means appear in production code.

- Every route has an HTTP verb and a response model. In the case of create_event() and read_event() they also take a parameter. The Depends parameter does some dependency injection; that is, it injects the database session.

- The on_event handler is called when the API is started, and runs the database migrations when needed.
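A small step up from silently ignoring migration errors is to log them, so that at least the reason ends up somewhere you can read it. The following is a minimal sketch of that idea using only the standard library. The helper run_migrations_safely and the two stand-in functions are hypothetical; in the app you would pass a function that calls command.upgrade().

```python
import logging
from typing import Callable

logger = logging.getLogger("migrations")


def run_migrations_safely(migrate: Callable[[], None]) -> bool:
    """Run migrate() and report whether it succeeded, logging any failure."""
    try:
        migrate()
    except Exception:
        # logger.exception() records the message plus the full traceback.
        logger.exception("Database migration failed")
        return False
    return True


def working_migration() -> None:
    """Stand-in for a migration that succeeds."""


def failing_migration() -> None:
    """Stand-in for a migration that fails, e.g. because a table exists."""
    raise RuntimeError("table already exists")


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    print("first run ok:", run_migrations_safely(working_migration))
    print("second run ok:", run_migrations_safely(failing_migration))
```

Whether the application should keep starting after a failed migration is a design decision; returning a boolean leaves that choice to the caller.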

Testing it

You can now start testing your newly minted API by typing the following in your terminal or command line:

    uvicorn app:app --port 8100 --reload

Now go to http://127.0.0.1:8100/docs and you should see something like this:

Play with it, extend it, and test it.
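If you prefer a script over Postman or the docs page, the standard library is enough to talk to the API. The sketch below assumes the server runs on port 8100 as in the uvicorn command and uses the /event/ and /events/ routes defined earlier. The helper names and the example event are made up. The request is only built, not sent, until you uncomment the last lines.

```python
import json
from urllib.request import Request, urlopen

BASE_URL = "http://127.0.0.1:8100"  # assumption: same port as the uvicorn command


def build_create_request(name, description=None, location=None) -> Request:
    """Build (but do not send) a POST /event/ request with a JSON body."""
    fields = {"name": name, "description": description, "location": location}
    # Leave out fields that were not given instead of sending null values.
    payload = {key: value for key, value in fields.items() if value is not None}
    return Request(
        f"{BASE_URL}/event/",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: Request):
    """Send the request and decode the JSON response (needs a running server)."""
    with urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    request = build_create_request("Summer Festival", location="Utrecht")
    print(request.get_method(), request.full_url)
    # Uncomment once the API is running:
    # print(send(request))
    # print(send(Request(f"{BASE_URL}/events/")))
```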

Conclusion

My main motivation for experimenting with FastAPI was that I wanted to try an alternative to Django Rest Framework which was less bloated. FastAPI seems to fit that bill. Although it is not as easy to develop for as Django, especially when it comes to database migrations, which are built into Django, it is still pretty easy and straightforward. In a later article I hope to focus a bit more on FastAPI’s performance.

One feature of FastAPI that would win me over is the fact that it has Swagger/OpenAPI integration more or less out of the box.