Introduction

For the past couple of years I have been reading about Django and the Django Rest Framework, and especially about the good developer experience they offer. This of course piqued my curiosity, so I decided to run a small experiment and build the following:

- A Django Rest Framework app for administering events, like festivals. Mind you: I kept this very simple

- This app accesses a PostgreSQL database

- The Django app is containerized

- Together with a postgres container, it must be deployed to a Kubernetes cluster. For that I use minikube locally

The code for the whole project can be found in this GitHub repository.

Prerequisites

You will need a couple of things:

- Preferably the latest version of Python. You can download it from here.

- An installation of postgres, and an installation of pgAdmin, the graphical administration tool for Postgres.

- An IDE like PyCharm or Visual Studio Code.

- An installation of the Docker desktop

- A Docker hub account so you can push your image to the docker hub.

- And minikube installed (or another local kubernetes cluster)

Also, some basic knowledge of Python would be good, and access to a terminal or a commandline

Getting started

Open your commandline or terminal in an empty directory and type:

python -m venv env

env\Scripts\activate
# This is for Windows

Or on Linux/Mac:

source env/bin/activate

This sets up a virtual environment, so we can install all our packages locally.

Now open your favourite IDE in this same directory and add a ‘requirements.txt’ file with the following content:

django

djangorestframework

psycopg2

Save this file, and enter in your terminal or commandline:

pip install -r requirements.txt

This will install the requirements. We use psycopg2 to connect to the postgres database, and we need the django frameworks.

After this is done, type in your commandline:

django-admin startproject webevents .

Mind the period at the end of this command. Next type:

python manage.py startapp eventsapi

That is it. To test our setup:

python manage.py runserver

Now head over to your browser and go to http://127.0.0.1:8000 and you should see the Django starting page.

If all works well, this is it, the basic setup is done

The Model

Go to the models.py file in your eventsapi directory and add this WebEvent model:

from django.db import models  # already present in the generated models.py


class WebEvent(models.Model):
    name = models.CharField(max_length=100)
    location = models.CharField(max_length=100)
    date = models.DateField()
    time = models.TimeField()
    description = models.TextField()
    created_at = models.DateTimeField(auto_now_add=True)

    def __str__(self):
        return self.name

A short description:

- an id is always added implicitly by the framework

- Every event has a location, a description, a date and a time.

- Because we like to know when an event is added, the created_at field is added, where the current datetime is automatically filled in.

- The __str__(self) is needed to show a string representation of the model, for now it is just the name.

In order for our model to show up in the admin interface, we need to register this. Go to the ‘admin.py’ file in the eventsapi directory, and replace the contents with this:

from django.contrib import admin

from eventsapi.models import WebEvent

# Register your models here.

admin.site.register(WebEvent)

But to show up in the API, we also need a serializer. Add a ‘serializers.py’ in your eventsapi directory, and add the following code:

from rest_framework import serializers

from eventsapi.models import WebEvent

class WebEventSerializer(serializers.ModelSerializer):
    class Meta:
        model = WebEvent
        fields = ['id', 'name', 'location', 'date', 'time', 'description']

Some explanation is needed:

- The WebEventSerializer class is derived from the ModelSerializer. This is needed so the contents of the different objects are shown in the UI, and in the resulting JSON

- The class Meta defines which class is serialized, and what fields within the class are serialized.
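To picture the result, here is a plain-Python sketch (no Django or DRF involved) of the JSON shape the serializer should produce for one event. The event values are made up for illustration, and the helper `to_json_ready` is hypothetical; it only mimics DRF's default of rendering dates and times as ISO 8601 strings.

```python
import json
from datetime import date, time

# Hypothetical values standing in for one WebEvent row.
event = {
    "id": 1,
    "name": "Summer Festival",
    "location": "City Park",
    "date": date(2024, 7, 5),
    "time": time(20, 30),
    "description": "Open-air concert",
}


def to_json_ready(row):
    """Render date and time values as ISO 8601 strings, leave the rest as-is."""
    return {
        key: value.isoformat() if isinstance(value, (date, time)) else value
        for key, value in row.items()
    }


print(json.dumps(to_json_ready(event), indent=2))
```

Note that created_at does not appear, because it is not in the fields list of the serializer.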

The View

Now open the ‘views.py’ and replace the existing code with the following:

from django.shortcuts import render

from rest_framework import generics

from eventsapi.models import WebEvent

from eventsapi.serializers import WebEventSerializer

# Create your views here.

class WebEventList(generics.ListCreateAPIView):
    queryset = WebEvent.objects.all()
    serializer_class = WebEventSerializer


class WebEventDetail(generics.RetrieveUpdateDestroyAPIView):
    queryset = WebEvent.objects.all()
    serializer_class = WebEventSerializer

I am using generic views here, a quite powerful feature of the Django Rest Framework:

- ListCreateAPIView: used for read-write endpoints to represent a collection of objects

- RetrieveUpdateDestroyAPIView: used for a read-write-delete endpoint on a single instance.

Defining the URLS

In order to access the endpoints, we need URLs. In your eventsapi directory add a ‘urls.py’ file with the following contents:

from django.urls import path

from eventsapi.views import WebEventList, WebEventDetail

urlpatterns = [
    path('events/', WebEventList.as_view()),
    path('events/<int:pk>/', WebEventDetail.as_view()),
]

A short breakdown of this code:

- The ‘events/’ path will direct to the list-view and return the list of WebEvents in the database

- The ‘events/<int:pk>’ path gets an extra parameter for the view, namely the pk or primary key. The view will use this to retrieve the item with this primary key.
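To make the routing a bit more tangible, the sketch below imitates the two routes with plain regular expressions. It is not Django's own code: the `ROUTES` table and the `resolve` function are hypothetical, and the only thing borrowed from Django is that the int converter matches one or more digits and hands the view an integer.

```python
import re

# Imitation of the two routes; the view names are plain strings here.
ROUTES = [
    (re.compile(r"^events/$"), "WebEventList"),
    (re.compile(r"^events/(?P<pk>[0-9]+)/$"), "WebEventDetail"),
]


def resolve(path):
    """Return (view name, keyword arguments) for a path, or None if nothing matches."""
    for pattern, view_name in ROUTES:
        match = pattern.match(path)
        if match:
            # Captured groups arrive as strings; the int converter turns them into ints.
            kwargs = {key: int(value) for key, value in match.groupdict().items()}
            return view_name, kwargs
    return None


print(resolve("events/"))      # ('WebEventList', {})
print(resolve("events/42/"))   # ('WebEventDetail', {'pk': 42})
print(resolve("events/abc/"))  # None
```

A path like events/abc/ matches neither route, which is why a non-numeric key ends in a 404 rather than reaching the view.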

That is it, the api is set up, now we need to integrate it into our project

The Settings file

Open the ‘settings.py’ file in the webevents directory and add this line under ‘from pathlib import Path’

import os

We will need this to access the environment variables later on.

Now change the ALLOWED_HOSTS to:

ALLOWED_HOSTS = ["*"]

This allows access from all hosts, which is needed later on when we deploy to Kubernetes.

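Next, make sure 'rest_framework' and 'eventsapi' are added to the INSTALLED_APPS list, otherwise Django will not pick up the framework or our model when we migrate later on.

Now scroll down to DATABASES and change it to the following: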

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.postgresql',
        'NAME': os.getenv('POSTGRES_DB', 'pythoneventsapi'),
        'USER': os.getenv('POSTGRES_USER', 'postgres'),
        'PASSWORD': os.getenv('POSTGRES_PASSWORD', 'secret1234'),
        'HOST': os.getenv('HOST', 'localhost'),
        'PORT': os.getenv('PORT', '5432'),
    }
}

For the app to connect to a postgres database it needs the following:

- The engine, this is a constant string as you can see

- The name of the database in ‘NAME’. We use the os.getenv() method with a default so we can still run it locally if needed. Once deployed these values will be stored in environment variables.

- The database user with access to the database in ‘USER’ with its accompanying password in ‘PASSWORD’

- The hostname of the database in ‘HOST’. It will be different once we deploy to Kubernetes, so we also read it from the environment, but we still default to localhost for local development

- The same goes for the port

That is it for the settings file
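The fallback behaviour can be tried out without Django. The sketch below is only an illustration: the function `database_settings` is hypothetical and takes the environment as a plain mapping, so the same lookups can be run once with nothing set (local development) and once with the kind of values the cluster will provide.

```python
import os


def database_settings(environ=os.environ):
    """Build the 'default' database dict from an environment-like mapping."""
    return {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": environ.get("POSTGRES_DB", "pythoneventsapi"),
        "USER": environ.get("POSTGRES_USER", "postgres"),
        "PASSWORD": environ.get("POSTGRES_PASSWORD", "secret1234"),
        "HOST": environ.get("HOST", "localhost"),
        "PORT": environ.get("PORT", "5432"),
    }


# Local development: nothing is set, so every default applies.
local = database_settings({})

# In the cluster: only the variables that are set override the defaults.
cluster = database_settings({"HOST": "postgresdb.default", "POSTGRES_DB": "webeventspython"})

print(local["HOST"], local["NAME"])      # localhost pythoneventsapi
print(cluster["HOST"], cluster["NAME"])  # postgresdb.default webeventspython
```

Keep in mind that environment variables are always strings, which is why the port default is '5432' and not the number 5432.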

Another URLs file

Open the ‘urls.py’ file in the main project and replace it with this code:

from django.contrib import admin

from django.urls import path, include

urlpatterns = [
    path('admin/', admin.site.urls),
    path('', include('eventsapi.urls')),
]

Here we define the ‘admin’ route for the admin console and include the two events routes from the eventsapi.

Migrating

In your terminal now type:

python manage.py makemigrations eventsapi

This will create a migrations directory in the eventsapi directory.

Now if that went well type:

python manage.py migrateThat is it, now all we need to do is create a superuser:

python manage.py createsuperuser

Now we can put it to the test:

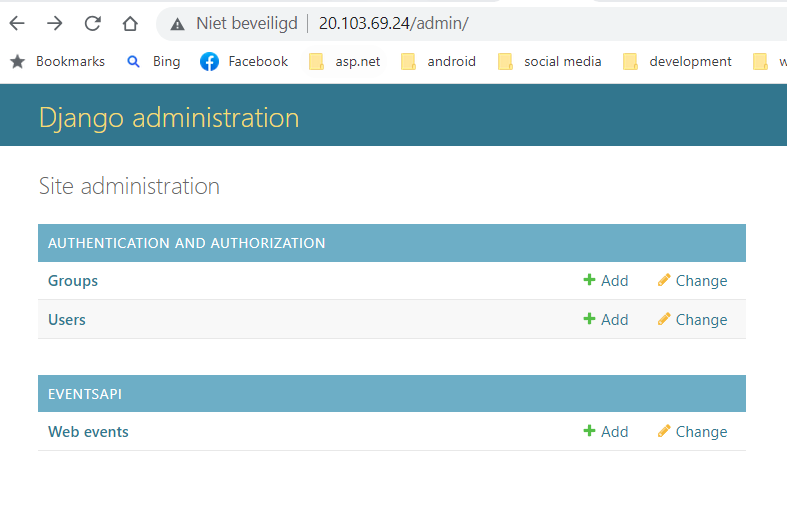

python manage.py runserverOpen your browser at http://127.0.0.1:8000/admin and log in. You should the WebEvents table on the left side, try adding a new event

Now open http://127.0.0.1:8000/events and you will see the list of events
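The same endpoints can also be called from code. Below is a minimal client sketch using only the standard library. It assumes the development server is running on 127.0.0.1:8000 and that the API is open to anonymous requests; the function names and the sample event are made up for illustration.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000"  # assumption: the local development server


def build_create_request(event, base_url=BASE_URL):
    """Prepare (but do not send) a POST request that creates one event."""
    return urllib.request.Request(
        f"{base_url}/events/",
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def list_events(base_url=BASE_URL):
    """Fetch all events as a list of dicts; needs the server to be running."""
    with urllib.request.urlopen(f"{base_url}/events/") as response:
        return json.load(response)


# Hypothetical event; dates and times are sent as ISO 8601 strings.
new_event = {
    "name": "Summer Festival",
    "location": "City Park",
    "date": "2024-07-05",
    "time": "20:30:00",
    "description": "Open-air concert",
}

request = build_create_request(new_event)
print(request.get_method(), request.full_url)
```

With the server running, `urllib.request.urlopen(request)` sends the POST, and `list_events()` should then include the new event.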

If this all works, it is time to deploy this thing to a Kubernetes cluster

Dockerize your app

Before we can start on the real Dockerfile, we need to add the following shell script. Put it in your root directory and name it entrypoint.sh. On Linux or Mac, also make it executable with chmod +x entrypoint.sh, since the Dockerfile runs it directly. In this file we run the database migrations, create a superuser, and start the server:

#!/bin/bash

python manage.py migrate

echo "from django.contrib.auth.models import User; User.objects.create_superuser('$DJANGO_SUPERUSER_USERNAME','$DJANGO_SUPER_USER_EMAIL','$DJANGO_SUPERUSER_PASSWORD')" | python manage.py shell

python manage.py runserver 0.0.0.0:8000

As you can see we use some environment-variables such as $DJANGO_SUPERUSER_USERNAME. We will define them in the Dockerfile. Add the ‘Dockerfile’ in the root directory as well and add these contents:

FROM python:3.11.4-slim-buster

WORKDIR /usr/src/app

ENV PYTHONDONTWRITEBYTECODE 1

ENV PYTHONUNBUFFERED 1

ENV DJANGO_SUPERUSER_USERNAME admin

ENV DJANGO_SUPER_USER_EMAIL info@test.com

ENV DJANGO_SUPERUSER_PASSWORD secret1234

RUN pip install --upgrade pip

COPY ./requirements.txt /usr/src/app

RUN apt-get update \
    && apt-get -y install libpq-dev gcc

RUN pip install -r requirements.txt

COPY . /usr/src/app

EXPOSE 8000

CMD ["./entrypoint.sh"]

A step by step explanation:

- As the base image we use the python-3.11.4 slim base image. At the time of writing this is the latest version of Python. Feel free to update if needed

- Our workdir will be /usr/src/app

- The PYTHONDONTWRITEBYTECODE means no .pyc will be written on the import of source files. This saves on imagesize.

- The PYTHONUNBUFFERED environment makes sure output to stdout and stderr is unbuffered so we see the logs without delay

- Next we see the three environment variables needed for our entrypoint script

- We upgrade pip to its latest version

- We copy the ‘requirements.txt’ file to the workdirectory

- Next we update the image and install libpq and gcc. This is needed to install the psycopg2 package

- Now we can safely run pip install to install the packages

- The rest of the source is copied

- We expose port 8000 to the outside world

- Finally we call our shellscript to start the server.
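Since the entrypoint script runs the migrations as soon as the container starts, the database has to be reachable at that moment. This is not part of the original setup, but a small wait loop could guard against the web container starting first. The sketch below assumes the same HOST and PORT environment variables as the settings file; the function names and the file name mentioned afterwards are hypothetical. It only checks that the TCP port accepts connections, which is a weaker guarantee than postgres being fully ready.

```python
import os
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=1.0):
    """Return True once a TCP connection succeeds, False when the timeout passes."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: retry until the deadline.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


def main():
    """Wait for the database named in HOST/PORT; return a process exit code."""
    host = os.getenv("HOST", "localhost")
    port = int(os.getenv("PORT", "5432"))
    if wait_for_port(host, port):
        print(f"{host}:{port} is reachable")
        return 0
    print(f"gave up waiting for {host}:{port}")
    return 1
```

Saved as, say, wait_for_db.py with `raise SystemExit(main())` added at the bottom, it could be run from entrypoint.sh just before the migrate line.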

Building the image

Now we can build the image:

docker build -t <your dockerhubusername>/webeventspython:v1 .

Do not forget the period at the end of the command. This can take some time, so be patient.

Pushing the image

If you are not already logged in to the docker hub, type:

docker login

Now that you are logged in you can push the image. Depending on your internet speed this can take some time:

docker push <your dockerhubusername>/webeventspython:v1

Now that is out of the way, we can start on our Kubernetes journey:

The Kubernetes bit

Now add a kubernetes directory in the root directory of your project. It is here we will build our kubernetes files

The Configmap

In order for us to share some data, like the login to the postgres server, we use a configmap. I know that a secrets file might have been better, but that will be the subject of another article.

Now add a ‘db-configmap.yaml’ to the kubernetes directory and edit it like this:

apiVersion: v1
kind: ConfigMap
metadata:
  name: db-secret-credentials
  labels:
    app: postgresdb
data:
  POSTGRES_USER: 'eventadmin'
  POSTGRES_DB: "webeventspython"
  POSTGRES_PASSWORD: "secret1234"
  HOST: "postgresdb.default"
  PORT: "5432"

A short explanation:

- The name of the configmap is db-secret-credentials

- In the data section the different values are defined.

Starting minikube

If you are using minikube then you can deploy it right away. First make sure minikube is started by typing:

minikube status

If it is not started then type:

minikube start

Deploying the configmap

Make sure your local kubernetes cluster is running and type, from within the kubernetes directory:

kubectl apply -f db-configmap.yaml

And it will be deployed.

Another minikube tip

In a new terminal type:

minikube dashboard

This is the web interface to the minikube cluster, so you can see exactly what is happening

Storage

The database will need some persistent storage, that is why Kubernetes has persistent volumes and persistent volume claims, and we will define them as follows. First of all start by adding a ‘db-persistent-volume.yaml’ to your kubernetes directory:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: postgresdb-pv
  labels:
    type: local
    app: postgresdb
spec:
  storageClassName: manual
  capacity:
    storage: 8Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data/db"

Again a short explanation:

- The name of the volume is postgresdb-pv.

- The storageclass is ‘manual’ because we will manually provision the storage

- Storage is set to 8Gi, which might be a lot for our purposes, so you can reduce it if you like

- Since we are on a single node for now, we use the ReadWriteOnce access mode, which lets the volume be mounted read-write by a single node only.

- Next is the hostPath, i.e. the path on the node's filesystem

Now we can add the claim. Add ‘db-persistent-volume-claim.yaml’ to your kubernetes directory:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: db-persistent-pvc
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      # the PVC storage
      storage: 8Gi

Here we request a volume of at least 8Gi with the ReadWriteOnce access mode. We will use it in our database deployment.
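How a claim finds its volume can be pictured with a few lines of Python. This is a deliberately simplified imitation, not how Kubernetes is implemented: the real matching takes more into account, and the function names and dict layout here are made up. It only captures the three things visible in the two files: the storage class must be the same, the requested access modes must be offered by the volume, and the volume must be at least as large as the request.

```python
# Binary suffixes used in the manifests; other suffixes are left out for brevity.
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(text):
    """Convert a quantity such as '8Gi' to a number of bytes."""
    for suffix, factor in UNITS.items():
        if text.endswith(suffix):
            return int(text[: -len(suffix)]) * factor
    return int(text)


def can_bind(volume, claim):
    """Simplified check whether a volume could satisfy a claim."""
    return (
        volume["storageClassName"] == claim["storageClassName"]
        and set(claim["accessModes"]) <= set(volume["accessModes"])
        and parse_quantity(volume["capacity"]) >= parse_quantity(claim["request"])
    )


volume = {"storageClassName": "manual", "accessModes": ["ReadWriteOnce"], "capacity": "8Gi"}
claim = {"storageClassName": "manual", "accessModes": ["ReadWriteOnce"], "request": "8Gi"}

print(can_bind(volume, claim))                         # True
print(can_bind(volume, {**claim, "request": "10Gi"}))  # False
```

So if you reduce the storage in the volume, remember to reduce the request in the claim as well, otherwise the claim will stay pending.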

You can deploy the storage with the following command:

kubectl apply -f db-persistent-volume.yaml

kubectl apply -f db-persistent-volume-claim.yaml

The Database Deployment

Now that we have storage and the configuration set up, add a ‘db-deployment.yaml’ file to your kubernetes directory:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgresdb
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgresdb
  template:
    metadata:
      labels:
        app: postgresdb
    spec:
      containers:
        - name: postgresdb
          image: postgres
          imagePullPolicy: Always
          ports:
            - containerPort: 5432
          envFrom:
            - configMapRef:
                name: db-secret-credentials
          volumeMounts:
            - mountPath: /var/lib/postgres/data
              name: db-data
      volumes:
        - name: db-data
          persistentVolumeClaim:
            claimName: db-persistent-pvc

There is a lot to unpack here:

- The name of the deployment is postgresdb

- We use one replica

- The container is named postgresdb, and it simply always pulls the latest postgres image from Docker Hub. At the time of writing this is version 15

- The deployment opens port 5432, the standard postgres port

- Next it gets all the data, like databasename and username from the configmap

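- Then it mounts the /var/lib/postgres/data directory on the persistent volume claim, which in our case simply means it is hosted on a path on the node. Note that the version 15 image keeps its data in /var/lib/postgresql/data, so change the mountPath to that directory if the data should actually end up on the volume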

As you can see it is not that difficult. Now deploy this with:

kubectl apply -f db-deployment.yaml

The Database Service

The web-api needs to be able to access the database, through a service. So, add a db-service.yaml to your kubernetes directory:

apiVersion: v1
kind: Service
metadata:
  name: postgresdb
  labels:
    app: postgresdb
spec:
  ports:
    - port: 5432
  selector:
    app: postgresdb

A short breakdown:

- The service opens port 5432, the standard postgres port

- Through the selector it is connected to the postgresdb pod or pods. Since this is an internal service, no LoadBalancer is needed.

Deploy this with:

kubectl apply -f db-service.yaml

The Web deployment

The database pod is now deployed, and is accessible from other pods. Now we can start deploying our web api. Add a ‘web-deployment.yaml’ to your kubernetes directory:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: <your dockerhubusername>/webeventspython:v1
          imagePullPolicy: Always
          env:
            - name: "HOST"
              valueFrom:
                configMapKeyRef:
                  key: HOST
                  name: db-secret-credentials
            - name: "POSTGRES_USER"
              valueFrom:
                configMapKeyRef:
                  key: POSTGRES_USER
                  name: db-secret-credentials
            - name: "POSTGRES_PASSWORD"
              valueFrom:
                configMapKeyRef:
                  key: POSTGRES_PASSWORD
                  name: db-secret-credentials
            - name: "POSTGRES_DB"
              valueFrom:
                configMapKeyRef:
                  key: POSTGRES_DB
                  name: db-secret-credentials
            - name: "PORT"
              valueFrom:
                configMapKeyRef:
                  key: PORT
                  name: db-secret-credentials

This looks like quite a lot, but is not that bad:

- The name of the deployment is web-deployment

- The label app:web is used to identify the deployment later on when we build the service.

- Next we define the image to be used, which is the image you just pushed, and we always want the latest, hence the imagePullPolicy.

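- Next we define the environment variables. Here each variable is read individually with configMapKeyRef, whereas the database deployment pulled in the whole configmap with envFrom, so there is more than one way to get environment variables from a configmap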
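The five env entries all follow the same pattern, which becomes obvious when they are generated instead of typed. The sketch below is purely illustrative and not part of the project: the function `env_entries` is hypothetical, and it prints the list as JSON, a format kubectl also accepts for manifests.

```python
import json

CONFIGMAP_NAME = "db-secret-credentials"
KEYS = ["HOST", "POSTGRES_USER", "POSTGRES_PASSWORD", "POSTGRES_DB", "PORT"]


def env_entries(keys, configmap_name):
    """Build one env entry per key, each pointing at the same key in the configmap."""
    return [
        {
            "name": key,
            "valueFrom": {"configMapKeyRef": {"key": key, "name": configmap_name}},
        }
        for key in keys
    ]


print(json.dumps(env_entries(KEYS, CONFIGMAP_NAME), indent=2))
```

Listing the keys one by one has the advantage that the container only sees the variables it actually needs, and that each one can be renamed on the way in.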

You can deploy this by typing:

kubectl apply -f web-deployment.yaml

The Web service

A webservice is nice, but we need to access it, and for that we need a service. Add a ‘web-service.yaml’ to your kubernetes directory:

apiVersion: v1
kind: Service
metadata:
  name: web-service
spec:
  selector:
    app: web
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8000
  type: LoadBalancer

Line by line:

- The name of the service is web-service

- The selector is used to connect this service to web-deployment

- Port 80 is open to the outside, and since our web api is running on port 8000 that is our targetport

- Because this is an outside facing service, the type is loadbalancer

Deploy this with:

kubectl apply -f web-service.yaml

That is it, you can test it out now.

Testing it on minikube

If you are running on minikube you can test it by typing (in a new terminal):

minikube service web-service

This will open a browser. Add /admin to the URL, and enter ‘admin’ and ‘secret1234’ or whatever combination you defined in your Dockerfile. Now you are presented with the console. Try adding events, and listing them by replacing /admin with /events.

Conclusion

My first objective was to find out about the developer experience with Django Rest Framework. I have to admit it is quite good, though a bit quirky at times. The generic views are a real lifesaver.

Getting the whole thing to run on Kubernetes was a bit of a challenge while working on this, but once it runs, deploying a setup like this with Kubernetes is actually quite a pleasure.

If you want to see the whole code, complete with .dockerignore and the .gitignore files, please visit my github

Plans

Since I quite liked the experience I plan to write further articles on:

- Having the server run behind an nginx server in a different pod

- Adding more authentication

- Deploying using helmcharts

- Deploying this setup to Azure using CI/CD, either to an AKS or an Azure Container App

If you have any more ideas, please leave them in the comments.